A concept design for Father's Day 2021

Completed in Blender/Krita

Pixiv Link: https://www.pixiv.net/en/artworks/91842943

#ad

Happy Mother's Day 2021

Completed in Blender/Krita

Pixiv Link: https://www.pixiv.net/en/artworks/89712199

#ad

The following post shows one way to let one Kubernetes service generate configuration and share it with other services. It may not be the best approach, but it is one way to make the sharing work.

minikube start --vm-driver=kvm --extra-config=apiserver.Authorization.Mode=RBAC

Create a namespace with a role that can access all secrets in it, defined in a YAML file:

cat > create_default_namespace_role.yaml << END
kind: Namespace
apiVersion: v1
metadata:
  name: dummy
  labels:
    name: dummy
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  namespace: dummy
  name: dummy-default-role
rules:
  - apiGroups: ["*"]
    resources: ["*"]
    verbs: ["*"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: dummy-default-rolebinding
  namespace: dummy
subjects:
  - kind: ServiceAccount
    name: default
    namespace: dummy
roleRef:
  kind: Role
  name: dummy-default-role
  apiGroup: rbac.authorization.k8s.io
END

Create the namespace and default role:

kubectl create -f create_default_namespace_role.yaml

Prepare a Docker image for the datastore service. At the point in its startup where the auto-generated credential becomes available, create the secret as follows:

DUMMY_ACCESS_KEY='keyid'

DUMMY_ACCESS_SECRET='keysecret'

curl -X POST -H "Content-Type:application/json" -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" -k https://${KUBERNETES_PORT_443_TCP_ADDR}/api/v1/namespaces/$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace)/secrets -d "{\"apiVersion\":\"v1\",\"data\":{\"DUMMY_ACCESS_KEY\":\"$(printf '%s' "${DUMMY_ACCESS_KEY}" | base64)\",\"DUMMY_ACCESS_SECRET\":\"$(printf '%s' "${DUMMY_ACCESS_SECRET}" | base64)\"},\"kind\":\"Secret\",\"metadata\":{\"name\":\"dummy-admin-credential\"}}"

Note: the values in the data field must be base64 encoded before they are passed to the API.
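The encoding step can be sketched as a pair of small shell helpers. This is only a sketch under stated assumptions: the function names `b64` and `secret_payload` are made up for illustration, and a `base64` utility is assumed to be on the PATH. It uses `printf` so that no trailing newline ends up in the encoded value, and strips the line wrapping that some `base64` implementations add.

```shell
#!/bin/bash
# Hypothetical helpers (names are illustrative, not from the original setup).

# Base64-encode a string without a trailing newline and without line wrapping.
b64() {
    printf '%s' "$1" | base64 | tr -d '\n'
}

# Build the JSON body for a Secret holding the two credential fields.
# $1 = secret name, $2 = access key, $3 = access secret
secret_payload() {
    printf '{"apiVersion":"v1","kind":"Secret","metadata":{"name":"%s"},"data":{"DUMMY_ACCESS_KEY":"%s","DUMMY_ACCESS_SECRET":"%s"}}' \
        "$1" "$(b64 "$2")" "$(b64 "$3")"
}

secret_payload dummy-admin-credential keyid keysecret
```

The printed JSON could then be handed to curl through its -d option.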

DUMMY_ACCESS_KEY='keyid'

DUMMY_ACCESS_SECRET='keysecret'

curl -X PUT -H "Content-Type:application/json" -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" -k https://${KUBERNETES_PORT_443_TCP_ADDR}/api/v1/namespaces/$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace)/secrets/dummy-admin-credential -d "{\"apiVersion\":\"v1\",\"data\":{\"DUMMY_ACCESS_KEY\":\"$(printf '%s' "${DUMMY_ACCESS_KEY}" | base64)\",\"DUMMY_ACCESS_SECRET\":\"$(printf '%s' "${DUMMY_ACCESS_SECRET}" | base64)\"},\"kind\":\"Secret\",\"metadata\":{\"name\":\"dummy-admin-credential\"}}"

kubectl get secret dummy-admin-credential -o yaml --namespace dummy

and base64 decode the DUMMY_ACCESS_KEY and DUMMY_ACCESS_SECRET values.

curl -X GET -H "Content-Type:application/json" -H "Authorization: Bearer $(cat /var/run/secrets/kubernetes.io/serviceaccount/token)" -k https://${KUBERNETES_PORT_443_TCP_ADDR}/api/v1/namespaces/$(cat /var/run/secrets/kubernetes.io/serviceaccount/namespace)/secrets/dummy-admin-credential
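Reading a value back means pulling the field out of the returned JSON and decoding it. A minimal sketch follows; the helper name `secret_field` and the sample response are made up for illustration, and it assumes each field appears as "NAME": "value" on one line and that `base64` accepts `--decode`.

```shell
#!/bin/bash
# Hypothetical helper: extract one base64 field from a Secret JSON document
# and decode it.
# $1 = JSON text, $2 = field name
secret_field() {
    printf '%s\n' "$1" \
        | sed -n "s/.*\"$2\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" \
        | base64 --decode
}

# Sample response fragment (made up for illustration).
sample='{"kind":"Secret","data":{"DUMMY_ACCESS_KEY":"a2V5aWQ=","DUMMY_ACCESS_SECRET":"a2V5c2VjcmV0"}}'
secret_field "$sample" DUMMY_ACCESS_KEY
```

A real JSON parser would be more robust than sed; the sketch only avoids extra dependencies inside a small container image.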

Cannot find device 'Wacom Cintiq 13HD stylus'.

Cannot find device 'Wacom Cintiq 13HD eraser'.

xsetwacom --list devices

Wacom Cintiq 13HD Pen stylus id: 14 type: STYLUS

Wacom Cintiq 13HD Pen eraser id: 15 type: ERASER

Wacom Cintiq 13HD Pad pad id: 16 type: PAD

#!/bin/bash
#
# Toggles which screen the cintiq is mapped to.
if [ "$(cat ~/.wacom-mapping)" -eq 0 ];
then
    echo 1
    xsetwacom set "Wacom Cintiq 13HD Pen stylus" MapToOutput "HEAD-1"
    xsetwacom set "Wacom Cintiq 13HD Pen eraser" MapToOutput "HEAD-1"
    echo 1 > ~/.wacom-mapping
else
    echo 0
    xsetwacom set "Wacom Cintiq 13HD Pen stylus" MapToOutput "HEAD-0"
    xsetwacom set "Wacom Cintiq 13HD Pen eraser" MapToOutput "HEAD-0"
    echo 0 > ~/.wacom-mapping
fi

[localhost ~]$ wacom-toggle-mapping

1
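The toggle script assumes ~/.wacom-mapping already exists. A hedged variant of the same logic, with the state file passed as an argument and a missing file treated as state 0, could look like this. The function name `toggle_mapping` is hypothetical; the HEAD-0 and HEAD-1 output names come from the script above.

```shell
#!/bin/bash
# Hypothetical variant of the toggle logic.
# Flips the stored state and prints the output name to map to.
# $1 = path to the state file
toggle_mapping() {
    local state_file="$1" current next
    # A missing or empty state file counts as state 0.
    current="$(cat "$state_file" 2>/dev/null)"
    if [ "${current:-0}" -eq 0 ]; then next=1; else next=0; fi
    echo "$next" > "$state_file"
    echo "HEAD-$next"
}

# Possible usage (requires xsetwacom, so it is left commented out):
# output="$(toggle_mapping ~/.wacom-mapping)"
# xsetwacom set "Wacom Cintiq 13HD Pen stylus" MapToOutput "$output"
# xsetwacom set "Wacom Cintiq 13HD Pen eraser" MapToOutput "$output"
```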

sudo dnf upgrade

sudo dnf install dnf-plugin-system-upgrade

sudo dnf system-upgrade download --releasever=23 --best

sudo dnf erase *nvidia

and then redo

sudo dnf system-upgrade download --releasever=23 --best

sudo dnf system-upgrade reboot

After that, I was able to update successfully.

sudo dnf --allowerasing --releasever=22 downgrade xorg-x11-server-Xorg

echo 'exclude=xorg-x11*' | sudo tee -a /etc/dnf/dnf.conf
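Running the append more than once leaves duplicate exclude lines in the config file. A small sketch of an idempotent append is shown here; the helper name `append_once` is made up for illustration, and for /etc/dnf/dnf.conf it would have to run as root.

```shell
#!/bin/bash
# Hypothetical helper: append a line to a file only if the file does not
# already contain it as an exact, whole-line match.
# $1 = file path, $2 = line to add
append_once() {
    local file="$1" line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Possible usage (as root):
# append_once /etc/dnf/dnf.conf 'exclude=xorg-x11*'
```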

sudo reboot now

Ctrl + Alt + F2

Log in as a user that has sudo privileges.

sudo dnf -y install lightdm system-switch-displaymanager

sudo system-switch-displaymanager lightdm

sudo reboot now

After that, lightdm should prompt for login, and the system should be running Fedora 23 with the nVidia proprietary driver.

Remove the exclude=xorg-x11* line from /etc/dnf/dnf.conf once there is an official X.Org 1.18 release and an official nVidia release that supports it. (Also switch back from lightdm to gdm if it is fixed.)

https://github.com/openshift/origin

sudo dnf install vagrant vagrant-libvirt

vagrant plugin install vagrant-openshift

sudo dnf install docker

sudo dnf install nfs-utils

sudo firewall-cmd --permanent --add-service=nfs && sudo firewall-cmd --permanent --add-service=rpc-bind && sudo firewall-cmd --permanent --add-service=mountd && sudo firewall-cmd --reload

sudo dnf install git

sudo dnf -y install vagrant vagrant-libvirt git docker nfs-utils &&
sudo firewall-cmd --permanent --add-service=nfs &&
sudo firewall-cmd --permanent --add-service=rpc-bind &&
sudo firewall-cmd --permanent --add-service=mountd &&
sudo firewall-cmd --reload && vagrant plugin install vagrant-openshift

Move to a folder where the OpenShift source can be stored and clone it down:

git clone https://github.com/openshift/origin

cd origin

vagrant origin-init --stage inst --os centos7 openshift

Check the configuration:

cat .vagrant-openshift.json | grep centos7

This should produce the following:

"os": "centos7",

"box_name": "centos7_inst",

"box_url": "http://mirror.openshift.com/pub/vagrant/boxes/openshift3/centos7_virtualbox_inst.box"

"box_name": "centos7_base",

"box_url": "http://mirror.openshift.com/pub/vagrant/boxes/openshift3/centos7_libvirt_inst.box"

"ssh_user": "centos"

"ssh_user": "centos"

vagrant up

vagrant ssh

. /data/src/examples/sample-app/pullimages.sh

# Download the binary

curl -L https://github.com/openshift/origin/releases/download/v1.0.0/openshift-origin-v1.0.0-67617dd-linux-amd64.tar.gz | tar xzv

# Change to root user (for below scripts)

sudo su -

# http://fabric8.io/guide/openShiftInstall.html

export OPENSHIFT_MASTER=https://$(hostname -I | cut -d ' ' -f1):8443

echo $OPENSHIFT_MASTER

export PATH=$PATH:$(pwd)

# Create the log directory in advance

mkdir -p /var/lib/openshift/

chmod 755 /var/lib/openshift

# Remove previous generated config if any
rm -rf openshift.local.config

nohup openshift start \
    --cors-allowed-origins='.*' \
    --master=$OPENSHIFT_MASTER \
    --volume-dir=/var/lib/openshift/openshift.local.volumes \
    --etcd-dir=/var/lib/openshift/openshift.local.etcd \
    > /var/lib/openshift/openshift.log &

tail -f /var/lib/openshift/openshift.log

ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
        inet 192.168.121.169  netmask 255.255.255.0  broadcast 192.168.121.255
        inet6 fe80::5054:ff:fec6:ba8d  prefixlen 64  scopeid 0x20<link>
        ether 52:54:00:c6:ba:8d  txqueuelen 1000  (Ethernet)
        RX packets 184375  bytes 442794303 (422.2 MiB)
        RX errors 0  dropped 11  overruns 0  frame 0
        TX packets 119825  bytes 15028821 (14.3 MiB)
        TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

In the Vagrant VM, run the following commands:

# Link the current config

mkdir -p ~/.kube

ln -s `pwd`/openshift.local.config/master/admin.kubeconfig ~/.kube/config

export CURL_CA_BUNDLE=`pwd`/openshift.local.config/master/ca.crt

sudo chmod a+rwX openshift.local.config/master/admin.kubeconfig

sudo chmod +r openshift.local.config/master/openshift-registry.kubeconfig

# Create local registry
oadm registry --create --credentials=openshift.local.config/master/openshift-registry.kubeconfig --config=openshift.local.config/master/admin.kubeconfig

# Check the status
oc describe service docker-registry --config=openshift.local.config/master/admin.kubeconfig

oc login --certificate-authority=openshift.local.config/master/ca.crt -u test-admin -p test-admin

# Create new project

oc new-project test --display-name="OpenShift 3 WildFly" --description="This is a test sample project to test WildFly on OpenShift 3"

# Create new app

oc new-app -f https://raw.githubusercontent.com/bparees/javaee7-hol/master/application-template-jeebuild.json

# Trace the build

oc build-logs jee-sample-build-1

I0628 04:40:08.327578 1 sti.go:388] Copying built war files into /wildfly/standalone/deployments for later deployment...

I0628 04:40:08.352066 1 sti.go:388] Copying config files from project...

I0628 04:40:08.353790 1 sti.go:388] ...done

I0628 04:40:16.662515 1 sti.go:96] Using provided push secret for pushing 172.30.6.81:5000/test/jee-sample image

I0628 04:40:16.662562 1 sti.go:99] Pushing 172.30.6.81:5000/test/jee-sample image ...

I0628 04:40:24.410955 1 sti.go:103] Successfully pushed 172.30.6.81:5000/test/jee-sample

oc describe services

NAME       LABELS                                   SELECTOR        IP(S)           PORT(S)
frontend   template=application-template-jeebuild   name=frontend   172.30.88.128   8080/TCP
mysql      template=application-template-jeebuild   name=mysql      172.30.250.39   3306/TCP

cd origin

vagrant ssh -- -L 9999:172.30.167.74:8080

oc delete pods,services,projects --all

vagrant halt

vagrant destroy --force

mkdir centos_docker_builder;

cd centos_docker_builder;

vagrant box add centos65-x86_64-20140116 https://github.com/2creatives/vagrant-centos/releases/download/v6.5.3/centos65-x86_64-20140116.box

cat << EOF > Vagrantfile

# -*- mode: ruby -*-

# vi: set ft=ruby :

# Vagrantfile API/syntax version. Don't touch unless you know what you're doing!

VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|

config.vm.box = "centos65-x86_64-20140116"

# config.vm.provision "shell", path: "auto_build_setup.sh"

config.vm.synced_folder ".", "/vagrant", :mount_options => ["dmode=777","fmode=666"]

end

EOF

cat << 'EOFF' > start-tomcat.sh

#!/bin/bash

# From https://github.com/arcus-io/docker-tomcat7

ADMIN_USER=${ADMIN_USER:-admin}

ADMIN_PASSWORD=${ADMIN_PASSWORD:-admin}

MAX_UPLOAD_SIZE=${MAX_UPLOAD_SIZE:-52428800}

cat << EOF > /opt/apache-tomcat/conf/tomcat-users.xml

EOF

if [ -f "/opt/apache-tomcat/webapps/manager/WEB-INF/web.xml" ];
then
    chmod 664 /opt/apache-tomcat/webapps/manager/WEB-INF/web.xml
    sed -i "s^.*max-file-size.*^\t<max-file-size>${MAX_UPLOAD_SIZE}</max-file-size>^g" /opt/apache-tomcat/webapps/manager/WEB-INF/web.xml
    sed -i "s^.*max-request-size.*^\t<max-request-size>${MAX_UPLOAD_SIZE}</max-request-size>^g" /opt/apache-tomcat/webapps/manager/WEB-INF/web.xml
fi

/bin/sh -e /opt/apache-tomcat/bin/catalina.sh run

EOFF
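The upload-limit rewrite in start-tomcat.sh can be tried outside the container on any web.xml-style file. The following is a rough sketch, not the script's own code: the helper name `set_upload_limit` is hypothetical, and it assumes the limits are stored in `<max-file-size>` and `<max-request-size>` elements, as in the Tomcat manager's multipart configuration. It writes through a temporary file instead of relying on `sed -i`.

```shell
#!/bin/bash
# Hypothetical helper: set both multipart limits in a web.xml-style file.
# $1 = file path, $2 = limit in bytes
set_upload_limit() {
    local file="$1" limit="$2" tmp
    tmp="$(mktemp)" || return 1
    # '^' is used as the sed delimiter so the '/' in closing tags needs no escaping.
    sed -e "s^<max-file-size>.*</max-file-size>^<max-file-size>${limit}</max-file-size>^" \
        -e "s^<max-request-size>.*</max-request-size>^<max-request-size>${limit}</max-request-size>^" \
        "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Possible usage:
# set_upload_limit /opt/apache-tomcat/webapps/manager/WEB-INF/web.xml 104857600
```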

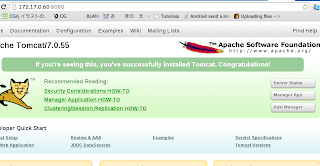

wget http://www.carfab.com/apachesoftware/tomcat/tomcat-7/v7.0.55/bin/apache-tomcat-7.0.55.tar.gz

cat << 'EOF' > build_centos.sh

#!/bin/bash

set -e

## Following script is coming from GitHub from https://github.com/blalor/docker-centos-base

## Thanks for the code

## requires running as root because filesystem package won't install otherwise,

## giving a cryptic error about /proc, cpio, and utime. As a result, /tmp

## doesn't exist.

[ $( id -u ) -eq 0 ] || { echo "must be root"; exit 1; }

tmpdir=$( mktemp -d )

trap "echo removing ${tmpdir}; rm -rf ${tmpdir}" EXIT

febootstrap \
    -u http://mirrors.mit.edu/centos/6.5/updates/x86_64/ \
    -i centos-release \
    -i yum \
    -i iputils \
    -i tar \
    -i which \
    -i http://mirror.pnl.gov/epel/6/i386/epel-release-6-8.noarch.rpm \
    centos65 \
    ${tmpdir} \
    http://mirrors.mit.edu/centos/6.5/os/x86_64/

febootstrap-run ${tmpdir} -- sh -c 'echo "NETWORKING=yes" > /etc/sysconfig/network'

## set timezone of container to UTC

febootstrap-run ${tmpdir} -- ln -f /usr/share/zoneinfo/Etc/UTC /etc/localtime

febootstrap-run ${tmpdir} -- yum clean all

## xz gives the smallest size by far, compared to bzip2 and gzip, by like 50%!

febootstrap-run ${tmpdir} -- tar -cf - . | xz > centos65.tar.xz

EOF

chmod a+x build_centos.sh

cat << EOF > Dockerfile

FROM scratch

MAINTAINER Danil Ko

ADD centos65.tar.xz /

# Need to update additional selinux packages due to https://bugzilla.redhat.com/show_bug.cgi?id=1098120

RUN yum -y install java-1.7.0-openjdk-devel wget http://mirror.centos.org/centos/6.5/centosplus/x86_64/Packages/libselinux-2.0.94-5.3.0.1.el6.centos.plus.x86_64.rpm http://mirror.centos.org/centos/6.5/centosplus/x86_64/Packages/libselinux-utils-2.0.94-5.3.0.1.el6.centos.plus.x86_64.rpm

# Use COPY to keep the tar file as-is instead of extracting it automatically

COPY apache-tomcat-7.0.55.tar.gz /tmp/

RUN cd /opt; tar -xzf /tmp/apache-tomcat-7.0.55.tar.gz; mv /opt/apache-tomcat* /opt/apache-tomcat; rm /tmp/apache-tomcat-7.0.55.tar.gz;

ADD start-tomcat.sh /usr/local/bin/start-tomcat.sh

EXPOSE 8080

CMD ["/bin/sh", "-e", "/usr/local/bin/start-tomcat.sh"]

EOF

vagrant up

vagrant ssh -c "cd /vagrant; sudo ./build_centos.sh"

vagrant destroy

# Move the centos_docker_builder folder to a docker host machine, in this example, it is the same machine

# Also download the tomcat file and name it as apache-tomcat-7.0.55.tar.gz

docker build -t centosimage .

# Run as a background daemon process and with the container name webtier

docker run -d --name webtier centosimage

# In a separate terminal, run the following (this assumes only webtier is running; otherwise, use docker ps -a to find the container id and pass it to docker inspect)

docker inspect webtier | grep IP

"IPAddress": "172.17.0.60",

"IPPrefixLen": 16,

docker stop webtier; docker rm webtier;

docker rmi centosimage;
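The container IP lookup shown earlier can be reduced to just the address. This is a small sketch only: the helper name `extract_ip` is made up, and it assumes the inspect output contains a line of the form "IPAddress": "..." like the sample output above.

```shell
#!/bin/bash
# Hypothetical helper: read docker-inspect style lines on stdin and print
# the value of the first "IPAddress" entry.
extract_ip() {
    sed -n 's/.*"IPAddress": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Sample input taken from the output shown above.
printf '%s\n' '"IPAddress": "172.17.0.60",' '"IPPrefixLen": 16,' | extract_ip

# Possible usage against a running container:
# docker inspect webtier | extract_ip
```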

#!/bin/bash

# Clean up gear info
ssh $gear_ssh_url "cd git; rm -rf *.git; cd *.git; git init --bare; exit"

# Create a new repo to overwrite history (or do a new git clone; I found it faster to create a new repo and point it at the remote repo)
git init
git add -A .
current_date=`date`
git commit -m "Automatic Push as of $current_date due to code change"
git remote add origin $gear_git_url
git push origin master -u --force

#!/bin/bash

# Reference from the article http://stackoverflow.com/questions/11929766/how-to-delete-all-git-commits-except-the-last-five

current_branch="$(git branch --no-color | cut -c3-)" ;

current_head_commit="$(git rev-parse $current_branch)" ;

echo "Current branch: $current_branch $current_head_commit" ;

# For history A B C D (D is the current head commit), C is new_history_begin_commit

new_history_begin_commit="$(git rev-parse $current_branch~1)" ;

echo "Recreating $current_branch branch with initial commit $new_history_begin_commit ..." ;

git checkout --orphan new_start $new_history_begin_commit ;

git commit -C $new_history_begin_commit ;

git rebase --onto new_start $new_history_begin_commit $current_branch;

git branch -d new_start ;

git reflog expire --expire=now --all;

git gc --prune=now;

# Still require a push for remote to take effect, otherwise the push will not go through as there is no change

if [ -f .invoke_update ];

then

rm -rf .invoke_update;

else

touch .invoke_update;

fi

git add -A .;

current_date=`date`;

git commit -m "Force clean up history $current_date";

git push origin master --force;
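The orphan-branch and rebase steps can be tried safely in a throwaway repository before running them on a real one. The following is a sketch under assumptions: the function name `truncate_history_demo` and the demo identity are made up, `git` must be installed, and the current branch is read with `git rev-parse --abbrev-ref HEAD` instead of parsing `git branch` output. It builds four commits, keeps the last two, and prints how many commits remain.

```shell
#!/bin/bash
# Hypothetical demo of the history truncation on a temporary repository.
# The body runs in a subshell so the caller's directory is left untouched.
truncate_history_demo() (
    set -e
    export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
    export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com
    repo="$(mktemp -d)"
    cd "$repo"
    git init -q .
    for msg in A B C D; do
        echo "$msg" > file.txt
        git add file.txt
        git commit -q -m "$msg"
    done
    branch="$(git rev-parse --abbrev-ref HEAD)"
    begin="$(git rev-parse "$branch~1")"           # commit C
    git checkout -q --orphan new_start "$begin"    # parentless branch with C's tree
    git commit -q -C "$begin"                      # new root commit reusing C's message
    git rebase -q --onto new_start "$begin" "$branch" >/dev/null 2>&1
    git branch -D new_start >/dev/null
    git rev-list --count HEAD                      # number of commits left
    cd / && rm -rf "$repo"
)

truncate_history_demo
```

The reflog expiry, garbage collection and forced push from the script above are left out here, since the demo repository has no remote.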